AzureDataStudio Blog series

1) Azure Data Studio and SQL Saturdays

2) ADS Keyboard Shortcuts – Part 1

3) ADS Keyboard Shortcut and Name drop

4) ADS Server and Switching Profiles – Setting up the ability to switch between different dashboards and user profiles

5) Azure Data Studio – Database Status Dashboards – Steps to create a dashboard, and a clever trick to run a script across different SQL versions with different syntax

6) ADS Extensions – Brief intro to extensions

7) SQL Saturday Belgium – My first European SQL Saturday experience

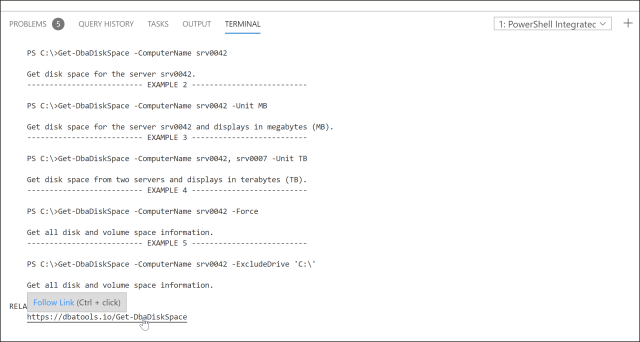

8) ADS Error switching in PowerShell – An error when switching to the PowerShell kernel in Notebooks, and the fix

9) Azure Data Studio Notebooks Markup – Annotating notebooks, headers, lists, and formatting, with a clever hack to get around text alignment issues

10) Azure Data Studio – wow more time saving – Searching for servers or adding in new servers

11) Leeds and Manchester Data Platform Meetup AzureDataStudio – A trip over the Pennines, and another great feature: comparing the clipboard with the active window

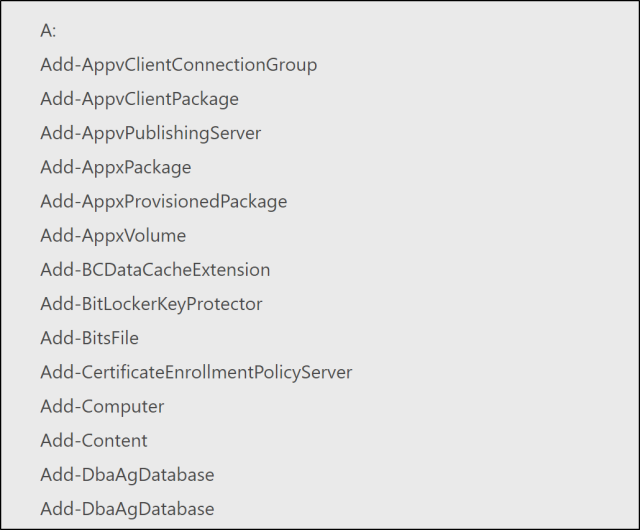

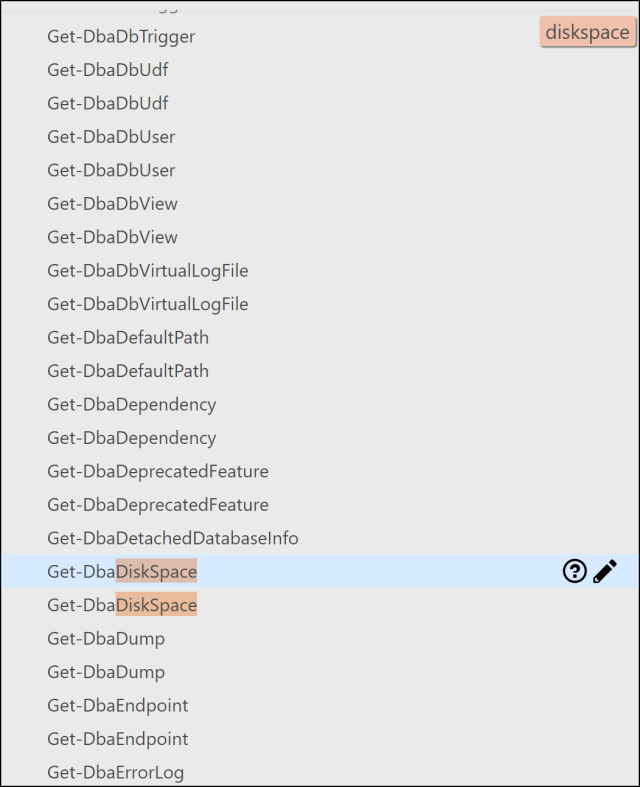

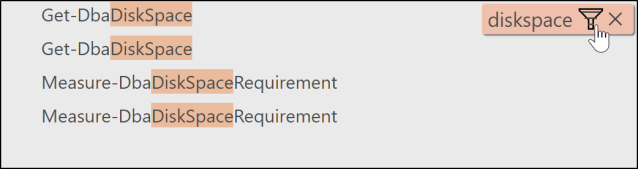

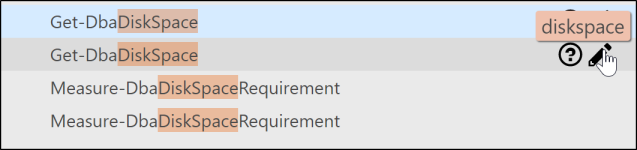

12) Azure Data Studio – The gift that keeps on giving – Even easier filtering for files

13) Less AzureDataStudio more Yorkshire – Trip to Haworth

14) Getting the Git repository – AzureDataStudio. And an offer that I might regret! Maybe not – Link to the repository

15) This contents page.

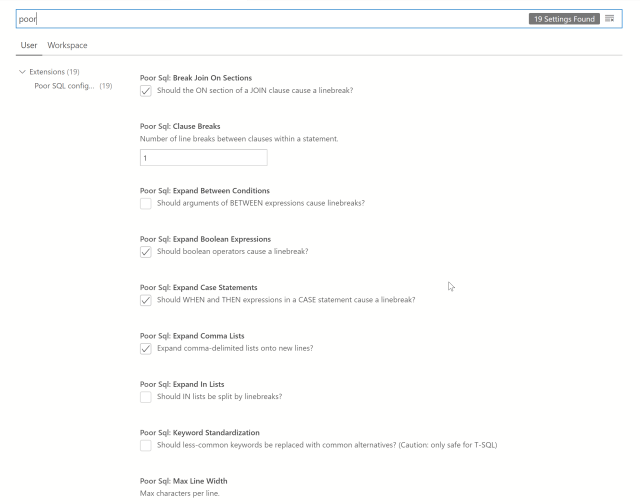

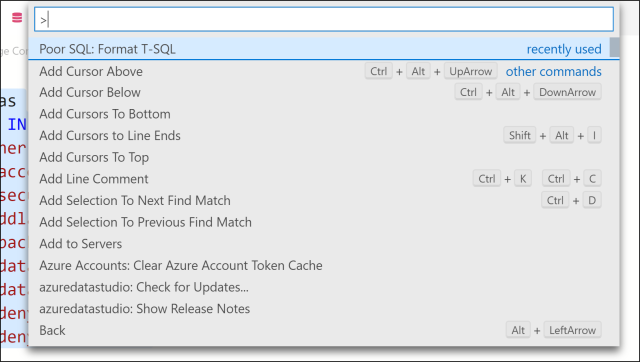

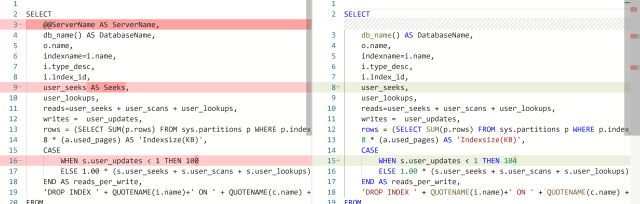

16) Azure Data Studio formatting TSQL – Easy formatting of SQL text

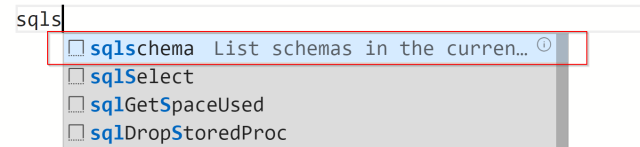

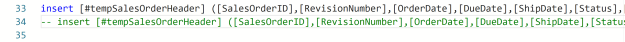

17) Azure Data Studio Snippets

18) Azure Data Studio my snippets – List of TSQL files and a link to the snippets sql.json file

19) Azure Data Studio Notebook Charts – charting in Notebooks

20) Azure Data Studio – Working remotely, setting up ADS to run without installation

21) Azure Data Studio – Run book – How I utilised Notebooks for a SQL upgrade/migration

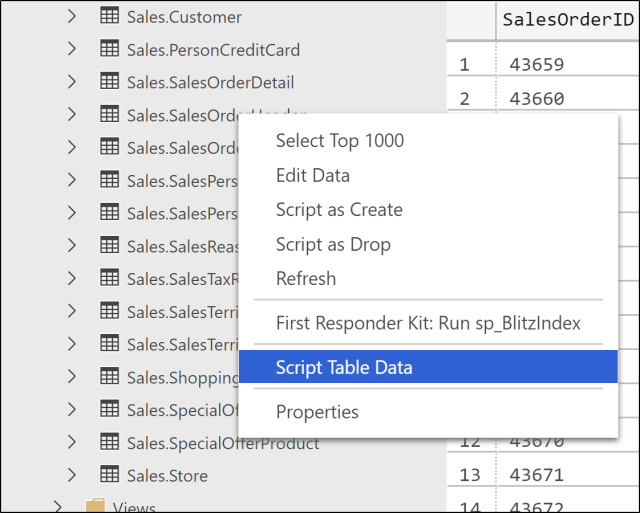

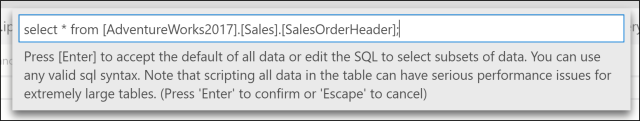

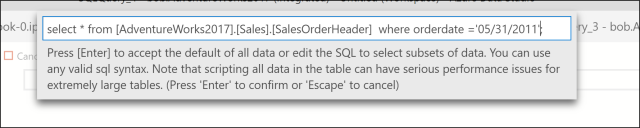

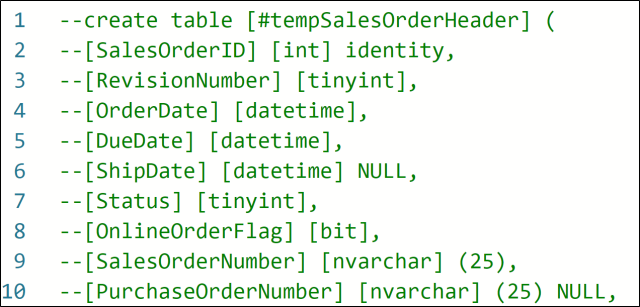

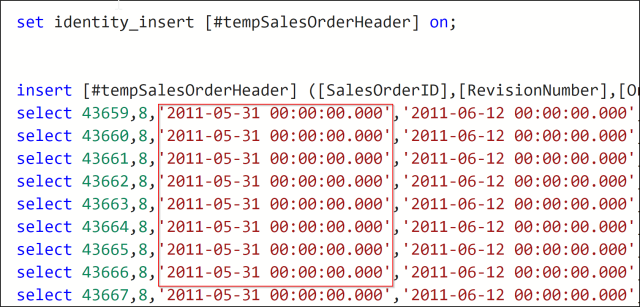

22) Azure Data Studio Data Scripter – Ability to script tables as INSERT statements and specify WHERE clauses

23) Azure Data Studio – More shortcut keys – Duplicating lines, and comparing the clipboard with the active window

24)

25)

26)

27)

28)

29)

30)

31)

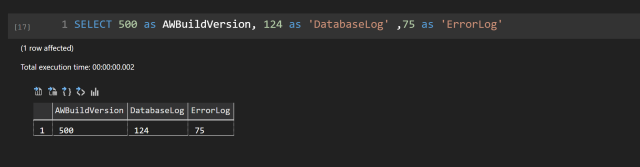

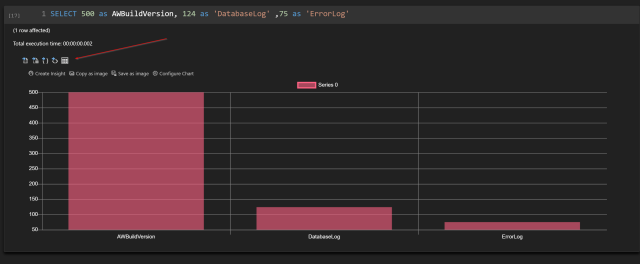

Just above the results, you can see the options to export to CSV, Excel and JSON, and at the end an option to create a chart. Click on it and you get visualizations.

Unfortunately, it doesn’t seem to allow saving in the chart format, but it’s still early days and a bit more playing is needed to see what can be achieved. The options to export to Excel will be really helpful for runbooks.

Nothing better than showing by example in several easy steps. This came from trying to write a query without knowing the schema in the database. I know, I’ll create a snippet.

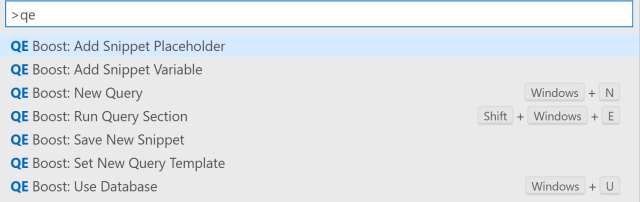

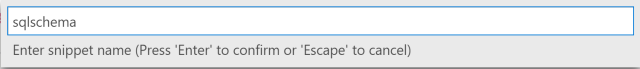

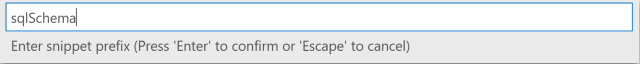

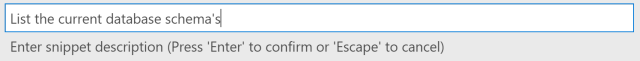
